The data is in, the hours in the lab or in the field are over, and it’s time to tackle the statistical analysis of your data. But how to organise the data or notes or papers that form the core of the evidence for your research question?

As is so often the case, the answer could well be “it depends”. It depends little bit on what questions you want to ask your data, and it depends a little bit on what software you will use to extract summary statistics from that data.

However there are some basic principles that apply broadly to converting a pile of data into a spreadsheet ready for a variety of statistical analysis.

- Use Excel to enter your data. An Excel spreadsheet is flexible and can be read by many statistical analysis packages. You may not (indeed probably will not!) end up using Excel for the statistical analysis itself, but a .xls or .csv file is a portable and compact format for your data in the first instance.

- If there is only one data set, put it in the first worksheet, easy to see.

- Put your description of the study and data dictionary in Sheet 2. Then the information stays with the data and is also easy to find.

- If there two or more data sets, use separate files, or separate sheets. This will be how they are read into other software for analysis.

- Do not include graphs, charts, summary tables on the same sheet as the data. Other stats packages will not be able to read graphs or charts, and summary tables won’t be in the same format as the rest of the spreadsheet.

- Use one row of the worksheet for each subject in your data collection. For human studies, this is likely to mean one row per person. In biological experiments, one row per sample.

- Give an ID number to each subject. This will help for tracking subjects down later if you re-order the rows of the spreadsheet.

- Use one column for each characteristic measured on each experimental unit (e.g. sex, height). These are called variables.

- Make column names:

- Brief and informative. Try not to leave them as Q1, Q2, Q3 and so on. Some of us learnt data entry when variable names could only be 8 characters long and so brevity was forced on us! This is no longer the case, but on the other hand if the column name is “What is your usual address or residence whether owned by you or rented” that’s going to be hard to read in tables and graphs. Use the data dictionary on Sheet 2 to remind you of the full definition.

- With no spaces or other special characters. This will make it easier to read your spreadsheet in to different stats packages.

- Lower case, for ease of typing. With predictive text and copy-paste this is probably less important than it used to be.

- Consistent across different data files and sheets. This is important! You (or your statistical collaborator) will not thank you if the variable is called Gender in one spreadsheet, gender in another and GENDER in a third!

- Use only one Excel row for column names. Again, this will make it easier to read your spreadsheet in to different stats packages which will expect variables names to only occupy one row.

- Factor levels within a column can be names or numbers, such as 1, 2, 999 or “Yes”, “No”, “Unsure”.

- If using names, make them brief and informative. Check your typing, indeed it might be easier to use lower case for factor levels e.g. “yes”, “no”, “unsure”.

- Explain the names or numbers in the data dictionary. That’s the information on Sheet 2.

- Leave no blank cells in the worksheet by:

- Explicitly coding missing values. By default, R uses NA as a missing value indicator, GenStat uses *, SPSS uses a dot (.). There are exceptions to this rule but as a general rule of thumb it’s a good idea to fill in all the blanks so you can see where the missing values occur. Your statistical collaborator will be able to advise on alternatives.

- Downfilling cell contents where they are the same for successive subjects.

- If your data set includes any calculated variables, also include the variables from which they were calculated. For instance, you might have calculated BMI from height and weight, so leave the height and weight in. Or you might have split a numeric column into three parts – below normal range, in normal range, above normal range. Keep the original numbers there so that if you change your mind about where to put the splits, you can easily do that.

- Proofread your data before handing it on to other collaborators.

- Columns containing numbers – use histograms, scatterplots, or boxplots to check the values you have typed in. Don’t forget to delete the charts before you finish (see point 1)!

- Columns containing factors – use tables or barcharts.

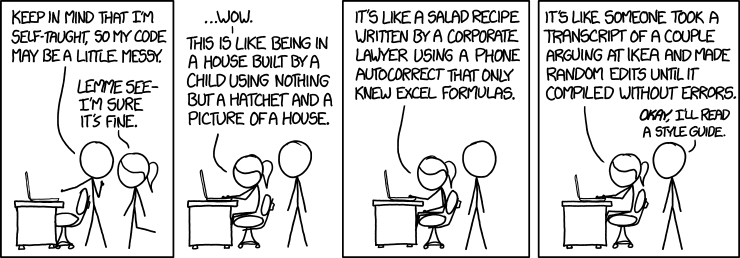

You may have seen this cautionary tale about what can happen if you don’t do all of these things – it’s well worth linking to here!

A short version of these guidelines is also available here.

Associate Professor Alice Richardson is Lead of the Statistical Support Network at the Australian National University. Her research interests are in linear models and robust statistics; statistical properties of data mining methods; and innovation in statistics education. In her role at the SSN she applies statistical methods to large and small data sets, especially for research questions in population health and the biomedical sciences.